Six Steps to Connecting SAP and Databricks with One Connect

Key Takeaways

⇨ Direct integration with tools like Onibex's One Connect simplifies the complex process of connecting SAP systems with modern data platforms such as Databricks, minimizing reliance on middleware.

⇨ Real-time Change Data Capture (CDC) is essential for data accuracy: beyond the initial load, it propagates ongoing updates and deletions so that the data in the target platform stays current and reliable.

⇨ The use of visual, low-code interfaces facilitates quicker implementations and reduces the technical barrier for users, fostering greater participation and speeding up the overall data integration process.

Integrating SAP systems with modern data platforms like Databricks is a critical objective for organizations seeking to leverage advanced analytics and AI. However, this process often involves significant challenges, including complex middleware implementations and extended development timelines.

By using Onibex’s One Connect tool, companies can integrate data directly between SAP and Databricks, with support for both batch and real-time processing, including inserts, updates, and deletes. Here’s how.

Step 1: Entity Setup

The process begins in the SAP system, where One Connect is used to define entities through two primary methods:

- Prepackaged Entities: Onibex provides a substantial library of predefined entities covering common SAP objects such as Material Master, Sales Orders, and Invoices. These entities come with pre-mapped tables and relationships, so users simply select the desired entity, which reduces initial setup and mapping effort.

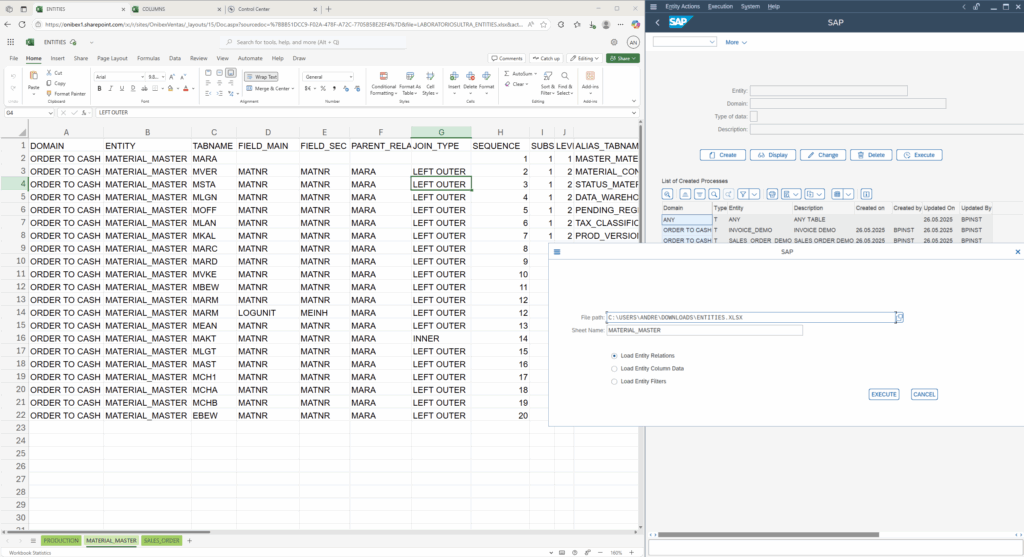

- Upload from Excel: For custom requirements or entities not in the library, One Connect allows users to upload definitions directly from Excel spreadsheets. One file defines the tables that make up the entity, and a second defines the specific fields to include from each table.
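To make the two-file layout concrete, here is a minimal Python sketch of how such definitions could be combined into a single entity description. It assumes the sheets have been exported to CSV (to stay within the standard library) and that their column headers are `TABLE` and `FIELD`. These headers, the function name `load_entity_definition`, and the choice of the SAP sales tables VBAK (header) and VBAP (items) are illustrative assumptions, not One Connect's actual template or API.

```python
import csv
import io
from collections import defaultdict

# Hypothetical "tables" file: one row per table in the entity (assumed layout).
TABLES_CSV = """TABLE
VBAK
VBAP
"""

# Hypothetical "fields" file: one row per field to extract (assumed layout).
FIELDS_CSV = """TABLE,FIELD
VBAK,VBELN
VBAK,ERDAT
VBAK,KUNNR
VBAP,VBELN
VBAP,POSNR
VBAP,MATNR
"""


def load_entity_definition(tables_csv: str, fields_csv: str) -> dict[str, list[str]]:
    """Combine the table list and the field list into {table: [fields]}."""
    tables = [row["TABLE"] for row in csv.DictReader(io.StringIO(tables_csv))]
    fields: dict[str, list[str]] = defaultdict(list)
    for row in csv.DictReader(io.StringIO(fields_csv)):
        if row["TABLE"] not in tables:
            # A field pointing at a table outside the entity is a definition error.
            raise ValueError(f"field {row['FIELD']} references unknown table {row['TABLE']}")
        fields[row["TABLE"]].append(row["FIELD"])
    # Keep the table order from the first file; tables without fields map to [].
    return {table: fields.get(table, []) for table in tables}


entity = load_entity_definition(TABLES_CSV, FIELDS_CSV)
print(entity)
```

For real .xlsx files, a library such as openpyxl could read the sheets in place of `csv`; the validation step, rejecting fields that reference tables outside the entity, is the part worth keeping.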

Step 2: Data Modeling and Configuration

Once an entity is loaded, users can refine and configure it through:

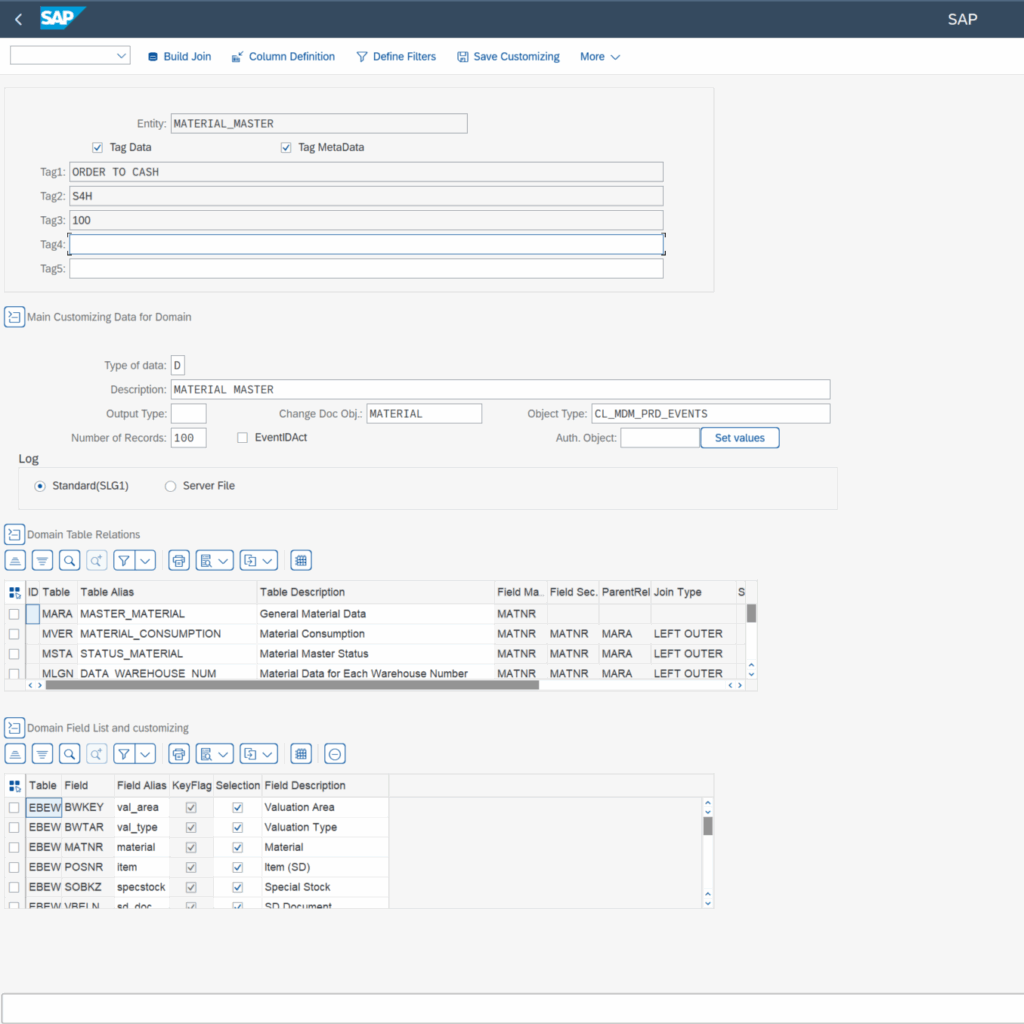

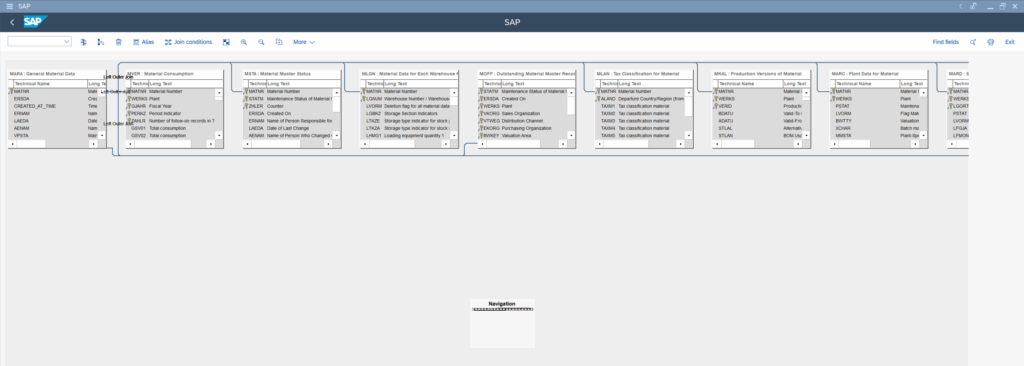

- Visual Data Modeler: Clicking “Build Join” opens a graphical interface displaying the tables and their relationships. This allows users to visually verify or modify the joins established, offering a clear, low-code/no-code view of the data structure.

- Column Definition: Users can view all available and selected columns for each table and double-click a column to add or remove it, with no ABAP coding required. Aliases are often provided to present SAP technical names in business-friendly terms.

- Entity Configuration: This includes specifying whether the entity represents Master Data or Transactional Data, adding descriptive text, and, importantly, linking it to pre-configured real-time events where applicable. Users can also add Tag Data to include specific fields as tags in the output topic for easier identification or routing.
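The configuration options above (joins, selected columns with aliases, an entity type, and tag fields) can be pictured as a small data model. The sketch below is a hypothetical Python illustration, not One Connect's internal representation: `EntityConfig`, `build_records`, and the record layout with `tags` and `payload` sections are assumptions, and the sample rows are invented. It inner-joins a sales-order header table to its items on `VBELN`, keeps only the aliased columns, and copies the tag fields into a separate section that a downstream consumer could route on.

```python
from dataclasses import dataclass, field


@dataclass
class EntityConfig:
    """Hypothetical in-memory model of a configured entity (not One Connect's API)."""
    name: str
    entity_type: str                  # "MASTER" or "TRANSACTIONAL"
    join_key: str                     # field shared by the header and item tables
    aliases: dict[str, str]           # SAP technical name -> business-friendly name
    tag_fields: list[str] = field(default_factory=list)  # fields copied into tags


def build_records(cfg: EntityConfig, header: list[dict], items: list[dict]) -> list[dict]:
    """Inner-join header and item rows on cfg.join_key, keep aliased columns, add tags."""
    headers_by_key = {row[cfg.join_key]: row for row in header}
    records = []
    for item in items:
        head = headers_by_key.get(item[cfg.join_key])
        if head is None:
            continue  # inner join: drop items without a matching header
        joined = {**head, **item}
        # Keep only the selected columns, renamed to their aliases.
        payload = {alias: joined[tech] for tech, alias in cfg.aliases.items()}
        tags = {name: joined[name] for name in cfg.tag_fields}
        records.append({"entity": cfg.name, "type": cfg.entity_type,
                        "tags": tags, "payload": payload})
    return records


cfg = EntityConfig(
    name="SalesOrder",
    entity_type="TRANSACTIONAL",
    join_key="VBELN",
    aliases={"VBELN": "SalesOrder", "POSNR": "Item", "MATNR": "Material", "KUNNR": "Customer"},
    tag_fields=["KUNNR"],
)
header = [{"VBELN": "0000000001", "KUNNR": "C100", "ERDAT": "20240101"}]
items = [
    {"VBELN": "0000000001", "POSNR": "000010", "MATNR": "M-01"},
    {"VBELN": "0000000002", "POSNR": "000010", "MATNR": "M-02"},  # no header: dropped
]
for record in build_records(cfg, header, items):
    print(record)
```

`ERDAT` is present in the header row but has no alias, so it is left out of the payload, which mirrors removing a column in the modeler.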

Step 3: Real-Time Event Configuration

For real-time data flow, One Connect relies on SAP’s underlying event-driven architecture. Although users link an entity to an event in Step 2, setting up those events is a separate and essential backend task. Companies can use specific events and associated ABAP classes defined within SAP to trigger data pushes when changes occur.

Alternatively, Onibex provides configurations for many standard events. These configurations are transportable across the SAP landscape: instead of configuring each event manually in every system, users import them through standard SAP transports, which saves effort.
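Conceptually, linking an entity to an event amounts to a subscription: when SAP raises the event for a business object, every linked entity pushes the changed object. The Python sketch below models that idea with a hypothetical `EventRegistry`. The event names are modelled on SAP business-object events for sales orders (object type `BUS2032`) but should be treated as illustrative, and the `push` callback stands in for whatever the ABAP event handler actually does in One Connect.

```python
from collections import defaultdict
from typing import Callable

# push(entity_name, object_key): stand-in for the real extraction/publish step.
Handler = Callable[[str, str], None]


class EventRegistry:
    """Minimal publish/subscribe registry linking SAP-style events to entities."""

    def __init__(self) -> None:
        self._links: dict[str, list[str]] = defaultdict(list)

    def link(self, event: str, entity: str) -> None:
        # Corresponds to linking an entity to an event during entity configuration.
        self._links[event].append(entity)

    def raise_event(self, event: str, object_key: str, push: Handler) -> int:
        # When the event fires, push the changed object for every linked entity.
        entities = self._links.get(event, [])
        for entity in entities:
            push(entity, object_key)
        return len(entities)


pushed: list[tuple[str, str]] = []
registry = EventRegistry()
registry.link("BUS2032.CREATED", "SalesOrder")  # illustrative event name

registry.raise_event("BUS2032.CREATED", "0000000001", lambda e, k: pushed.append((e, k)))
registry.raise_event("BUS2032.CHANGED", "0000000001", lambda e, k: pushed.append((e, k)))
print(pushed)
```

Only the creation event is linked here, so raising the change event pushes nothing; in practice both would usually be linked to keep the target current.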

Step 4: Batch Execution with Filters

For scenarios requiring bulk data transfer, One Connect provides a flexible batch execution process:

- Entity Selection: Users select a configured entity, such as Material Master.

- Filtering: Users can restrict the data set by various criteria or specific value ranges so that only the required data is extracted.

- Execution Mode: Data extraction can be run immediately for direct feedback or scheduled as a background job for large volumes or off-peak processing.
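SAP selection screens typically express such filters as ranges with a sign (`I` include, `E` exclude), an option such as `EQ` or `BT`, and low/high values. The sketch below assumes One Connect's filters follow similar semantics, which the article does not state: a row passes if it matches at least one include range (or no include ranges exist) and no exclude range. It supports only a subset of options, compares the creation date `ERDAT` as `YYYYMMDD` strings, and yields rows in fixed-size batches as a background job might. `Range`, `passes`, and `extract` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass(frozen=True)
class Range:
    """Select-option style range: sign I/E, option EQ/BT/GE/LE (subset only)."""
    sign: str
    option: str
    low: str
    high: str = ""

    def matches(self, value: str) -> bool:
        if self.option == "EQ":
            return value == self.low
        if self.option == "BT":
            return self.low <= value <= self.high
        if self.option == "GE":
            return value >= self.low
        if self.option == "LE":
            return value <= self.low
        raise ValueError(f"unsupported option {self.option}")


def passes(value: str, ranges: list[Range]) -> bool:
    """Included by at least one I range (or none exist) and by no E range."""
    includes = [r for r in ranges if r.sign == "I"]
    excludes = [r for r in ranges if r.sign == "E"]
    included = not includes or any(r.matches(value) for r in includes)
    return included and not any(r.matches(value) for r in excludes)


def extract(rows: Iterable[dict], field: str, ranges: list[Range],
            batch_size: int) -> Iterator[list[dict]]:
    """Yield the rows that pass the filter in batches of at most batch_size."""
    batch: list[dict] = []
    for row in rows:
        if passes(row[field], ranges):
            batch.append(row)
            if len(batch) == batch_size:
                yield batch
                batch = []
    if batch:
        yield batch


dates = ["20240105", "20240115", "20240201", "20240120"]
orders = [{"VBELN": f"{n:010d}", "ERDAT": d} for n, d in enumerate(dates, start=1)]
# January 2024, excluding 15 January.
ranges = [Range("I", "BT", "20240101", "20240131"), Range("E", "EQ", "20240115")]
for batch in extract(orders, "ERDAT", ranges, batch_size=1):
    print([row["VBELN"] for row in batch])
```

Batching keeps memory bounded for large volumes, which is one reason to prefer a scheduled background run over immediate execution.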

Step 5: Verifying Data and Schema in Databricks

In this step, the data arrives in the target platform, such as Databricks, often via a staging layer such as Kafka or Confluent. Verification involves running simple queries in Databricks that confirm the arrival and accuracy of the records. Users can also send metadata and manage the Schema Registry through One Connect.

This means the data structure is defined and maintained in the target, ensuring data quality, governance, and compatibility with downstream consumers. Keys are also managed, preserving data relationships.
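Beyond eyeballing a query result, a simple reconciliation can confirm that every source record arrived unchanged and that the target columns have the expected types. The Python sketch below assumes the source extract and the rows returned by a Databricks query are both available as lists of dictionaries sharing a business key. `reconcile` and `schema_drift` are hypothetical helpers, and the sample data and type names are invented.

```python
def reconcile(source: list[dict], target: list[dict], key: str) -> dict[str, list[str]]:
    """Compare rows by key: missing in target, unexpected in target, or different."""
    src = {row[key]: row for row in source}
    tgt = {row[key]: row for row in target}
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


def schema_drift(expected: dict[str, str], actual: dict[str, str]) -> list[str]:
    """Return columns that are absent from the target or have a different type."""
    return sorted(col for col, typ in expected.items() if actual.get(col) != typ)


source = [
    {"SalesOrder": "0000000001", "Customer": "C100"},
    {"SalesOrder": "0000000002", "Customer": "C200"},
    {"SalesOrder": "0000000004", "Customer": "C400"},
]
target = [
    {"SalesOrder": "0000000001", "Customer": "C100"},
    {"SalesOrder": "0000000002", "Customer": "C999"},
    {"SalesOrder": "0000000003", "Customer": "C300"},
]
report = reconcile(source, target, "SalesOrder")
print(report)

expected_schema = {"SalesOrder": "string", "Customer": "string", "NetValue": "decimal(15,2)"}
actual_schema = {"SalesOrder": "string", "Customer": "string", "NetValue": "double"}
print(schema_drift(expected_schema, actual_schema))
```

Comparing by key rather than by row count alone catches cases where one record is missing and another is duplicated, which a count check would miss.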

Step 6: Real-Time Data Creation and Synchronization

Users perform standard SAP transactions as usual, and the results are synchronized in real time. For example, a user creates a new sales order in SAP and saves it. Because of the event linkage configured in Steps 2 and 3, this action triggers One Connect. Within moments, a query in Databricks shows the newly created sales order record, demonstrating low-latency synchronization.

One Connect also ensures that changes in SAP are reflected in Databricks. For instance, if a user modifies an existing sales order and saves the changes, the configured real-time event captures these updates. A subsequent query in Databricks will show the sales order data reflecting these exact changes, ensuring the data lake remains an accurate, current representation of the SAP source system.
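Keeping the target accurate comes down to applying each create, update, and delete to the row with the same key, in order. The sketch below models that in plain Python under stated assumptions: each change carries an operation code (`c`, `u`, or `d`), the business key, a per-change sequence number, and the full after-image of the row. These field names, and the use of sequence numbers to skip redelivered events, are illustrative assumptions rather than a description of One Connect's message format.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ChangeEvent:
    """Hypothetical change record: op is 'c' (create), 'u' (update) or 'd' (delete)."""
    op: str
    key: str
    seq: int                     # assumed to increase with every source change
    row: Optional[dict] = None   # full after-image for c/u; None for d


def apply_changes(table: dict[str, dict], last_seq: dict[str, int],
                  events: list[ChangeEvent]) -> None:
    """Apply events in sequence order, skipping stale or redelivered ones per key."""
    for ev in sorted(events, key=lambda e: e.seq):
        if ev.seq <= last_seq.get(ev.key, -1):
            continue  # already applied: skipping keeps replays idempotent
        if ev.op in ("c", "u"):
            table[ev.key] = dict(ev.row)  # upsert the latest after-image
        elif ev.op == "d":
            table.pop(ev.key, None)       # delete propagates to the target
        else:
            raise ValueError(f"unknown op {ev.op!r}")
        last_seq[ev.key] = ev.seq


table: dict[str, dict] = {}
last_seq: dict[str, int] = {}
events = [
    ChangeEvent("c", "0000000001", 1, {"Customer": "C100", "NetValue": 500}),
    ChangeEvent("u", "0000000001", 2, {"Customer": "C100", "NetValue": 750}),
    ChangeEvent("c", "0000000002", 3, {"Customer": "C200", "NetValue": 90}),
    ChangeEvent("d", "0000000002", 4),
    ChangeEvent("u", "0000000001", 2, {"Customer": "C100", "NetValue": 750}),  # redelivered
]
apply_changes(table, last_seq, events)
print(table)
```

On Databricks itself, the same logic is usually expressed as a Delta Lake `MERGE INTO` statement with `WHEN MATCHED ... THEN UPDATE`, `WHEN MATCHED ... THEN DELETE`, and `WHEN NOT MATCHED THEN INSERT` clauses.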

What This Means for SAPinsiders

Direct integration simplifies complex processes. Modern tools can establish direct connections between SAP and platforms like Databricks, potentially eliminating the need for intermediary middleware layers and simplifying the overall integration architecture.

Full change data capture is achievable. Effective SAP-to-Databricks integration requires handling not just initial loads but also ongoing changes (updates and deletes). Look for solutions that provide robust, real-time Change Data Capture to maintain data accuracy in the target platform.

Utilizing low-code accelerates development. Visual, low-code interfaces, combined with pre-built content and features like field aliasing, can lower the technical barrier for SAP data integration, enabling faster implementation cycles and broader user participation.